EXISTS and NOT EXISTS:

We've already discussed IN and NOT IN in part 1. We'll use the same examples tables from there, so please run those statement to create the temporary tables that we'll be using. Everything below was done in SQL Server 2012.EXISTS:

Exists is slightly different to IN. It returns a true predicate as long as one row is returned from within the subquery, whereas an IN statement will match all values from the outer query to the values from the inner subquery. So you should be able to see right away the benefit of using EXISTS over an IN statement. All that SQL Server needs to do is find one row, then it can stop searching for further matches within the inner subquery. This is commonly referred to as short circuiting and can make for a much faster query.EXISTS also allows you to match against more than one column, whereas you would have to use multiple IN statement to achieve the same thing with IN, which would be very costly. Have a look at the below example. Try adding a second predicate in the subquery.

select *

from #products

where exists

(

select *

from #orderItems

where productName = #products.productName

);

Quite often I have seen developers avoid using a * in the inner subquery thinking this will produce less overhead. For example putting in a "SELECT 1" instead. There is no need to do this. Remember the EXISTS is simply checking to see if a row from the subquery is returned. It doesn't care about the columns, so doesn't include them anyway. Try running one against the other and checking out the execution plans. You'll see they will be exactly the same.

Of interest: You'll also notice in the execution plan, that it's done a TOP (1) on the subquery.

NOT EXISTS:

NOT EXISTS is similar to EXISTS, of course, but will only evaluate as true if NO results are returned by the subquery. Try the following:select *

from #products

where not exists

(

select *

from #orderItems

where productName = #products.productName

);

Now we don't have the same problem that we had with NOT IN when it returned nulls. But what if we wanted to compare two similar tables to see if any rows differ. For example, you are bringing in data from one system to another, but don't want to insert or update any rows if an exact matching row already exists in the destination table.

Let's make another product table and alter some of the data:

insert into #products (productName,price) values (null,0);

select * into #products2 from #products;

update #products2 set productName = null where productID = 6;

Now lets try and highlight any rows that differ between the two product tables when comparing against ProductID and ProductName:

select productID,productName from #products p1

where not exists

(

select *

from #products2

where productID = p1.productID

and productName = p1.productName

);

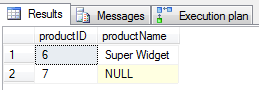

This returns the following:

Row 1: Great. It recognised that product 6 was different. #Product2 had the product name for this item as null. #Product did not.

Row 2: Not what we wanted. Product 7 is exactly the same in both tables, so why has it shown up here. Remember what we discussed in the previous post about nulls and that they essentially mean "unknown." When comparing two unknowns, the end result will also be unknown. So it cannot match the two.

You could of course use the ISNULL function for both ProductName columns, but this adds overhead, especially if you have many columns to compare.

This leads us on nicely to the next chapter where I will go over EXCEPT and INTERSECT.

Till then, MERRY CHRISTMAS!