Alarm bells should already be ringing.

Scenario:

You drag yourself groggily down the stairs after hitting snooze a few times and fumbling around for your robe while trying not to wake up your spouse and kids. You log in while switching between yawns and sips of coffee, hoping you don't make a cock-up while your brain is half asleep and all your other colleagues are out of reach. Then you wait for the application guy to do their thing, all the while thinking... "I could be sleeping through this bit." You get the go-ahead. Your heart drops when you realise you started without checking something because you're just that tired. Thankfully all is OK. Now you wait again for the app to start, and then for the tests. Go back to bed. Great. Now you're wide awake at 5 am and lie staring at the back of your eyelids for two hours... and for what? Just to push a few buttons? AAAAAH!

Avoiding user impact like this has its risks. I don't think I need to point out why doing anything in a production system when your body is screaming at you to go to sleep, or when all the other experts who could support you are asleep, is a bad idea.

Productivity for the rest of the day will also be impacted. All in all it's a risky AND expensive operation.

Also, for the guys involved... it just sucks.

Automation:

Do we really want an expensive resource being paid to simply push buttons? Why the hell is a DBA doing a deployment in the first place? Automation is obviously the proverbial elephant in the room. Get it done! There are some great tools out there to assist with this, like Octopus Deploy, DbUp, SSDT, Bamboo, PowerShell and many more. Now the DBA can sleep. Maybe just be on call. But we're still doing deployments out of hours...

Deploying the application in hours:

You want to deploy during office hours, but without impacting users. Have you heard of Blue / Green deployments? You effectively have an offline and an online production system. You deploy to the offline one, run all of your tests and, when all is good, switch. Your offline becomes your online and users won't notice a thing. Something's not right? Fine. Just don't switch. No rollback required. This is particularly great for legacy or monolithic applications.

Another option might be rolling deployments. These are a better fit for load-balanced applications and microservice platforms like Service Fabric. You deploy to one server / service at a time.
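To make the two ideas above a bit more concrete, here is a minimal Python sketch. It is not tied to any real tool: `Environment`, `Router`, `blue_green_deploy`, `rolling_deploy` and the test callbacks are all made-up names, and "deploying" is reduced to setting a version string. The point is only the control flow: with blue / green you switch after the tests pass, and with rolling you stop at the first unhealthy server.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Environment:
    # Hypothetical stand-in for a production environment or a single server.
    name: str
    version: str


@dataclass
class Router:
    # Stand-in for whatever points users at production (load balancer, DNS, ...).
    live: Environment
    idle: Environment

    def switch(self) -> None:
        # Swap roles: the freshly deployed idle side goes live.
        self.live, self.idle = self.idle, self.live


def blue_green_deploy(router: Router, new_version: str,
                      smoke_test: Callable[[Environment], bool]) -> bool:
    """Deploy to the idle side and switch only if the tests pass."""
    router.idle.version = new_version   # users are untouched so far
    if not smoke_test(router.idle):
        return False                    # no switch, so nothing to roll back
    router.switch()
    return True


def rolling_deploy(servers: List[Environment], new_version: str,
                   health_check: Callable[[Environment], bool]) -> bool:
    """Upgrade one server at a time and stop at the first unhealthy one."""
    for server in servers:
        server.version = new_version
        if not health_check(server):
            return False                # remaining servers keep the old version
    return True


if __name__ == "__main__":
    router = Router(live=Environment("blue", "1.0"),
                    idle=Environment("green", "1.0"))
    switched = blue_green_deploy(router, "1.1",
                                 smoke_test=lambda env: env.version == "1.1")
    print(switched, router.live.name, router.live.version)
```

In a real pipeline the callbacks would be your automated test suite and health probes, and the switch would be a load balancer or DNS change rather than a Python attribute swap.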

And you could probably do stuff with containers and other cool stuff, but let's face it... if you're having this problem then you're probably dealing with legacy or monolithic type apps.

Deploying the database in hours:

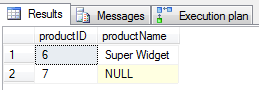

Dealing with data is usually harder than just the apps. But in this regard, it should actually be simpler. You just have to take care with how you develop and prepare your release. If you've taken care of the automation you're pretty much good. Just take care with destructive changes. Stagger them. If you need to delete a column, make the change in the app first. Then, in a future release, once the column is no longer being referenced, drop it. Make sure you've load tested your deployment in your staging environment so you know there shouldn't be any user-impacting changes. Develop your code and test well, and you're good.

So it may take some work to get to normal hour deployments, but it can most certainly be done, and with many other side benefits to be found.
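The staggered column drop mentioned above can be sketched like this. It is an illustration only: the `customer` table, the `legacy_code` column and the two queries are invented, and an in-memory SQLite database stands in for the real server. SQLite is also why the drop is done by rebuilding the table, which works on any SQLite version; on SQL Server and most other platforms it would be a plain `ALTER TABLE ... DROP COLUMN`.

```python
import sqlite3

# Hypothetical queries: what the app runs before and after "release 1".
OLD_QUERY = "SELECT id, name, legacy_code FROM customer"
NEW_QUERY = "SELECT id, name FROM customer"


def setup() -> sqlite3.Connection:
    """Create a throwaway database that still has the column we want to remove."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT, legacy_code TEXT)"
    )
    conn.execute("INSERT INTO customer (name, legacy_code) VALUES ('Ada', 'X1')")
    conn.commit()
    return conn


def drop_legacy_column(conn: sqlite3.Connection) -> None:
    """Release 2: the destructive change, shipped only after the app stopped using the column."""
    conn.executescript(
        """
        BEGIN;
        CREATE TABLE customer_new (id INTEGER PRIMARY KEY, name TEXT);
        INSERT INTO customer_new (id, name) SELECT id, name FROM customer;
        DROP TABLE customer;
        ALTER TABLE customer_new RENAME TO customer;
        COMMIT;
        """
    )


def query_works(conn: sqlite3.Connection, sql: str) -> bool:
    """Return True if the query runs against the current schema."""
    try:
        conn.execute(sql).fetchall()
        return True
    except sqlite3.OperationalError:
        return False


if __name__ == "__main__":
    conn = setup()
    # Release 1: the app moves to NEW_QUERY while the column still exists.
    print("before drop:", query_works(conn, OLD_QUERY), query_works(conn, NEW_QUERY))
    # Release 2: the column goes; only the old query would have broken.
    drop_legacy_column(conn)
    print("after drop: ", query_works(conn, OLD_QUERY), query_works(conn, NEW_QUERY))
```

The new query works on both sides of the drop, which is exactly why the app change has to ship first: by the time the column disappears, nothing in production is asking for it.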